edit: this project is now deployed on GitHub Pages:

https://wh0.github.io/nix-cache-view/

and the source is here:

I originally posted this project URL, which is where I was developing it on Glitch. I still use that workspace to develop the project, so it might be in a weird state sometimes.

https://trusted-friendly-sesame.glitch.me/


Cross-posted on the NixOS Discourse:

There’s this format that the Nix package manager uses for distributing packages online, in these .nar files. I wrote a client-side app to parse those package files so you can explore what’s inside them without having to set up Nix and download the package (and all its dependencies). See the post linked above from the NixOS Discourse for instructions on how to use it, if you’re interested.
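For the curious, here’s roughly what parsing a .nar involves. The format is a flat stream of length-prefixed strings: each one is a 64-bit little-endian length, then the bytes, then zero padding up to a multiple of 8. The file tree is spelled out with tokens like `"("`, `"type"`, `"regular"`, `"directory"`, `"entry"`, and `"symlink"`. This is a minimal TypeScript sketch, not the app’s actual code. The names `parseNar`, `NarReader`, and `NarNode` are made up for illustration, and it assumes the archive is already decompressed and fully loaded into memory.

```typescript
// Sketch of a reader for an *uncompressed* NAR (Nix ARchive) held in memory.
// Names (NarNode, NarReader, parseNar) are hypothetical, for illustration only.
// Every token: little-endian 64-bit length, raw bytes, zero padding to 8 bytes.

type NarNode =
  | { type: "regular"; executable: boolean; contents: Uint8Array }
  | { type: "symlink"; target: string }
  | { type: "directory"; entries: Map<string, NarNode> };

class NarReader {
  private pos = 0;
  private readonly bytes: Uint8Array;
  private readonly view: DataView;
  private readonly decoder = new TextDecoder();

  constructor(bytes: Uint8Array) {
    this.bytes = bytes;
    this.view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  }

  // Read one length-prefixed, 8-byte-aligned field as raw bytes.
  readBytes(): Uint8Array {
    if (this.pos + 8 > this.bytes.length) throw new Error("truncated NAR");
    // Combine the two 32-bit halves of the length so no BigInt is needed.
    const len =
      this.view.getUint32(this.pos, true) +
      this.view.getUint32(this.pos + 4, true) * 2 ** 32;
    this.pos += 8;
    if (this.pos + len > this.bytes.length) throw new Error("truncated NAR");
    const data = this.bytes.subarray(this.pos, this.pos + len);
    this.pos += len + ((8 - (len % 8)) % 8); // skip the zero padding
    return data;
  }

  readString(): string {
    return this.decoder.decode(this.readBytes());
  }

  expect(token: string): void {
    const got = this.readString();
    if (got !== token) throw new Error(`expected "${token}", got "${got}"`);
  }

  // Parse one "( type ... )" node, recursing into directory entries.
  readNode(): NarNode {
    this.expect("(");
    this.expect("type");
    const type = this.readString();
    if (type === "directory") {
      const entries = new Map<string, NarNode>();
      let tag = this.readString();
      while (tag === "entry") {
        this.expect("(");
        this.expect("name");
        const name = this.readString();
        this.expect("node");
        entries.set(name, this.readNode());
        this.expect(")");
        tag = this.readString();
      }
      if (tag !== ")") throw new Error(`unexpected directory tag "${tag}"`);
      return { type: "directory", entries };
    }
    let node: NarNode;
    if (type === "regular") {
      let executable = false;
      let tag = this.readString();
      if (tag === "executable") {
        this.expect(""); // the executable marker is followed by an empty string
        executable = true;
        tag = this.readString();
      }
      if (tag !== "contents") throw new Error(`expected "contents", got "${tag}"`);
      node = { type: "regular", executable, contents: this.readBytes() };
    } else if (type === "symlink") {
      this.expect("target");
      node = { type: "symlink", target: this.readString() };
    } else {
      throw new Error(`unknown node type "${type}"`);
    }
    this.expect(")");
    return node;
  }
}

function parseNar(bytes: Uint8Array): NarNode {
  const reader = new NarReader(bytes);
  reader.expect("nix-archive-1"); // magic string at the start of every NAR
  return reader.readNode();
}
```

Binary caches often serve NARs compressed (for example as .nar.xz), so in a browser you’d decompress first, hand the bytes to `parseNar`, and then walk `entries` recursively to build a file listing.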

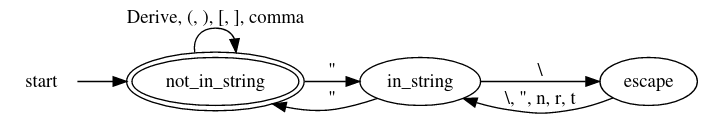

Nix also uses “derivation” files, which formally specify the instructions for building a package. You can distribute these online just like the packages they describe how to build, and they’re also packed in .nar files. I coded up an unglamorous webpage to view those as well, with hyperlinks to the various packages referenced in the build inputs, so you can thusly navigate the dependency tree.
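Once a derivation’s text is out of its .nar (a .drv is a single regular file, so the NAR root is just that file), the build inputs worth linking to sit in the second and third fields of a `Derive(...)` term written in the ATerm syntax. Here’s a rough TypeScript sketch, not the viewer’s actual code. The names `parseATerm`, `derivationInputs`, and `DrvInputs` are made up, and it assumes the classic `Derive(outputs, inputDrvs, inputSrcs, system, builder, args, env)` layout with no whitespace, which is how Nix writes .drv files.

```typescript
// Rough sketch: parse the ATerm text of a .drv file and pull out its build inputs.
// Assumes the classic Derive(outputs, inputDrvs, inputSrcs, system, builder, args, env)
// shape with no whitespace. All names here are hypothetical.

// Strings, lists/tuples (both returned as arrays), and constructors like Derive(...).
type ATerm = string | ATerm[] | { ctor: string; args: ATerm[] };

function parseATerm(src: string): ATerm {
  let pos = 0;
  const ident = /[A-Za-z]+/y; // sticky: only matches at lastIndex

  function fail(msg: string): never {
    throw new Error(`${msg} at offset ${pos}`);
  }

  // "..." with backslash escapes (\" \\ \n \r \t).
  function parseString(): string {
    pos++; // skip opening quote
    let out = "";
    while (pos < src.length && src[pos] !== '"') {
      let c = src[pos++];
      if (c === "\\") {
        const e = src[pos++];
        c = e === "n" ? "\n" : e === "r" ? "\r" : e === "t" ? "\t" : e;
      }
      out += c;
    }
    if (src[pos] !== '"') fail("unterminated string");
    pos++; // skip closing quote
    return out;
  }

  // Comma-separated values up to `close`; the opening bracket is already consumed.
  function parseSeq(close: string): ATerm[] {
    const items: ATerm[] = [];
    if (src[pos] === close) {
      pos++;
      return items;
    }
    for (;;) {
      items.push(parseValue());
      const c = src[pos++];
      if (c === close) return items;
      if (c !== ",") fail(`expected "," or "${close}"`);
    }
  }

  function parseValue(): ATerm {
    const c = src[pos];
    if (c === '"') return parseString();
    if (c === "[") { pos++; return parseSeq("]"); }
    if (c === "(") { pos++; return parseSeq(")"); }
    ident.lastIndex = pos;
    const m = ident.exec(src);
    if (m && src[pos + m[0].length] === "(") {
      pos += m[0].length + 1; // skip the constructor name and "("
      return { ctor: m[0], args: parseSeq(")") };
    }
    return fail("unexpected input");
  }

  const term = parseValue();
  if (pos !== src.length) fail("trailing input");
  return term;
}

interface DrvInputs {
  inputDrvs: string[]; // .drv paths of dependencies (candidates for hyperlinks)
  inputSrcs: string[]; // plain source store paths
}

function derivationInputs(drvText: string): DrvInputs {
  const term = parseATerm(drvText);
  if (typeof term !== "object" || Array.isArray(term) || term.ctor !== "Derive") {
    throw new Error("expected a Derive(...) term");
  }
  const asList = (t: ATerm | undefined): ATerm[] => {
    if (!Array.isArray(t)) throw new Error("expected a list");
    return t;
  };
  const asString = (t: ATerm | undefined): string => {
    if (typeof t !== "string") throw new Error("expected a string");
    return t;
  };
  return {
    // Field 1: [(drvPath, [outputNames]), ...]; keep just the paths.
    inputDrvs: asList(term.args[1]).map((pair) => asString(asList(pair)[0])),
    // Field 2: [storePath, ...]
    inputSrcs: asList(term.args[2]).map((t) => asString(t)),
  };
}
```

Each path in `inputDrvs` is the kind of thing a page like this could render as a link to that derivation’s own view, which is how clicking through walks the dependency tree one step at a time. Lists and tuples both come back as plain arrays because the sketch only cares about positions.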

Side note: derivation files usually aren’t shared online. It’s more common to share a “flake,” which is a functional program that generates a bunch of derivations. However, the official “nixpkgs” distribution is one giant flake representing all packages, and because it contains references to cryptocurrency mining software, the usual way of distributing that flake is not usable on Glitch. So instead I’m transmitting derivation files, which lets me pick and choose only specific build instructions that aren’t related to cryptocurrency mining. So it’s weird. And for that reason, I have not mentioned this second tool in my NixOS Discourse post.

That’s a brief overview of the project. It’s late, as it always tends to be when I get around to posting on the forum. I’ll be back with some technical content on what it was like building this.

Last thread: Broadening Nix package builds to the NixOS "small" set